Some time in the 1980s, my father said that he had always been opposed to the Vietnam War. My brother asked, appropriately enough, “Then who the hell was that man in our house in the 60s?”

That story is a little gem of how persuasion happens, and how people deny it.

I have a friend who was raised in a fundagelical world, who has changed zir mind on the question of religion, and who cites various studies to say that people aren’t persuaded by studies. That’s interesting.

For reasons I can’t explain, far too much research about persuasion involves giving new information to people who are strongly committed to a point of view and then concluding that they’re idiots for not changing their minds. They would be idiots for changing their minds because they’re given new information while in a lab. They would be idiots for changing their minds because one source tells them that they’re wrong.

We change our minds, but, at least on big issues, it happens slowly, due to a lot of factors, and we often don’t notice because we forget what we once believed.

Many years ago, I started asking students about times they had changed their minds. Slightly fewer many years ago, I stopped asking because I got the same answers over and over. And what my students told me was much like what I found in books like Leaving the Fold, in books by and about people who have left cults or changed their minds about Hell or creationism, and in what various friends said. They rarely described changing their minds on an important issue because they were given one fact or one argument. Under those circumstances, they often dug in, if only temporarily.

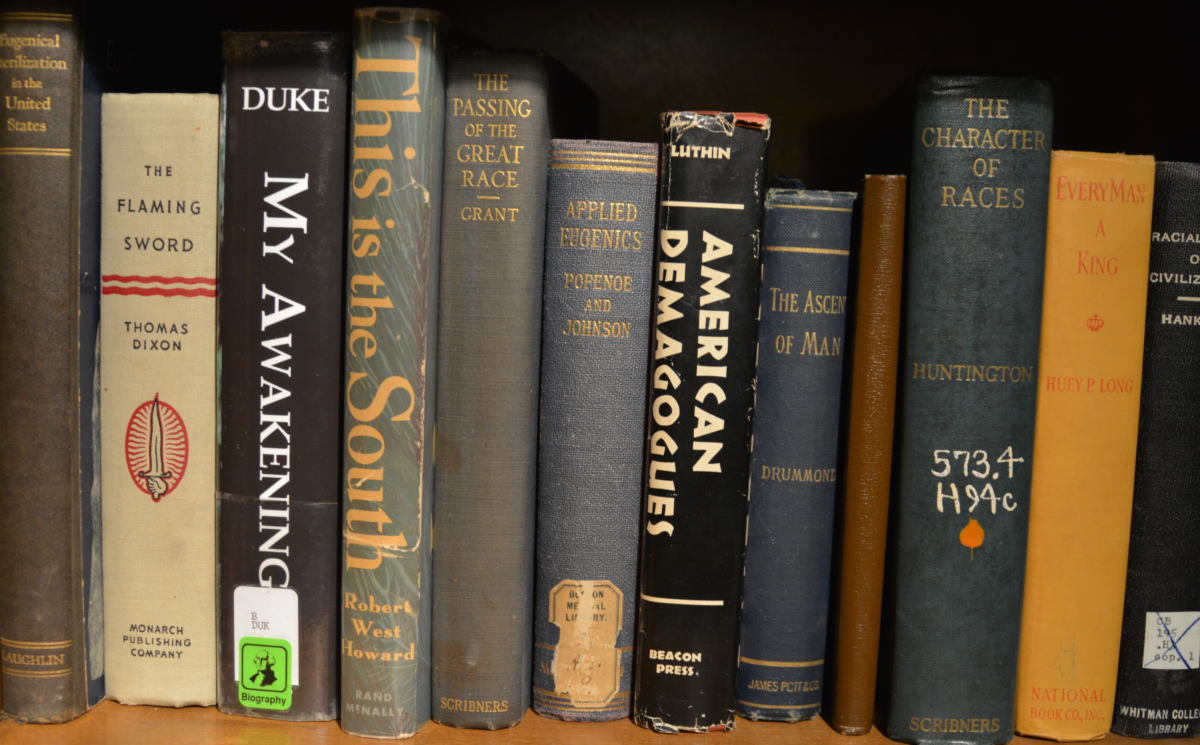

But we do change our minds, and there are lots of ways that happens, and the best of them are about a long, slow process of recognition that a belief is unsustainable.[1] Rob Schenck’s Costly Grace reads much like memoirs of people who left cults, or who changed their minds about evolution or Hell. They heard the counterarguments for years, and dismissed them for years, but, at some point, maintaining faith in creationism, in the cult, or in the cult’s leader just took too much work.

But why that moment? I think that people change their minds in different ways partly because their commitments come from different passions.

In another post I wrote about how some people are Followers. They want to be part of a group that is winning all the time (or, paradoxically, that is victimized). They will stop being part of that group when it fails to satisfy that need for totalized belonging, or when they can no longer maintain the narrative that their group is pounding on Goliath. At that point, they’ll suddenly forget that they were ever part of the group (or claim that, in their hearts, they always dissented, something Arendt noted about many Germans after Hitler was defeated).

Some people are passionate about their ideology, and are relentless about proving, deductively, that everyone else is wrong. They do so by arguing from their own premises and then cherry-picking data to support that ideology. If you point out that their beliefs are non-falsifiable, they deflect (generally through various attempts at stasis shift). These are the people Philip Tetlock described as hedgehogs. Not only are hedgehogs wrong a lot (they don’t do better than a monkey throwing darts), but they don’t remember being wrong, because they misremember their original predictions. The consequence is that they can’t learn from their mistakes.

Some people have built a career or public identity around advocating a particular cult, faction, ideology, or product, and are passionate about defending every step into charlatanism they take in the course of that advocacy. Interestingly enough, it’s often these people who do end up changing their minds, and what they describe is a kind of “straw that breaks the camel’s back” situation. People who leave cults often describe a sudden moment when they say, “I just can’t do this.” And then they see all the things that led up to that moment. A collection of memoirs by people who abandoned creationism includes several that specifically mention discovering the large overlap in DNA between humans and other primates as the data that pushed them over the edge. But, again, that data was the final push, not the only one.

Some people are passionate about politics, and about various political goals (theocracy, democratic socialism, libertarianism, neoliberalism, anarchy, third-way neoliberalism, originalism), and are willing to compromise to achieve the goals of their political ideology. In my experience, people like this are relatively open to new information about means, and so they look as though they’re much more open to persuasion, but even they won’t abandon a long-time commitment because of one argument or one piece of data; they too shift position only after a lot of data.

At this point, I think that supporting Trump falls into the first and third categories. There is plenty of evidence that he is mentally unstable, thin-skinned, corrupt, unethical, vindictive, racist, authoritarian, dishonest, and even dangerous. There really isn’t a deductive argument to make for him, since he doesn’t have a consistent commitment to (or expression of) any economic, political, or judicial theory, and he certainly doesn’t have a principled commitment to any particular religious view. It’s all about what helps him in the moment, in terms of his ego and wealth. That’s why his defenders keep getting their defenses entangled and end up engaging in kettle logic. (I never borrowed your kettle, it had a hole in it when I borrowed it, and it was fine when I returned it.)

The consequence of Trump’s pure narcissism (and mental instability) and his lack of principled commitment to any consistent ideology is that he regularly contradicts both himself and the talking points his supporters have been loyally repeating, abandons policies they’ve been passionately advocating on his behalf, and leaves them defending nearly indefensible statements. What a lot of Trump critics might not realize is that Trump keeps leaving his loyal supporters looking stupid, fanatical, gullible, or some combination of all three. He isn’t even giving them good talking points, and many of their defenses and deflections are embarrassing.

For a long time, I was hesitant to shame them, since an important part of pro-GOP rhetoric is that “libruls” look down on regular people like them. I was worried that expressing contempt for the embarrassingly bad (internally contradictory, incoherent, counterfactual, revisionist) talking points would reinforce that talking point. When people are talking about Trump with people they know well, I think whether to avoid coming across as contemptuous is a judgment they have to make case by case.

But for strangers, I think that shaming can work because it brings to the forefront that Trump is setting his followers up to be embarrassed. That means he is, if not actually failing, at least not fully succeeding at what a leader is supposed to do for his followers. The whole point of being a loyal follower is that the leader rewards that loyalty. The follower gets honor and success by proxy, by being a member of a group that is crushing it. That success by proxy comes from Trump’s continual success, his stigginit to the libs, and his giving them rhetorical tactics that will make “libs” look dumb. Instead, he’s making them look dumb. So, pointing out that their loyal repetition of pro-Trump talking points is making them look foolish is putting more straw on that camel’s back.

Supporting Trump, I’m saying, is at this point largely a question of loyalty. Pointing out that their loyalty is neither returned nor rewarded is the strategy that I think will eventually work. But it will take a lot of repetition.

[1] Conversions to cults, on the other hand, involve a sudden embrace of the cult’s narrative, one that erases all ambiguity and uncertainty.