Tom Cotton has proposed a bill that would prohibit federal funds from being used to support the teaching of the NY Times’s The 1619 Project. He said, “As the Founding Fathers said, [slavery] was the necessary evil upon which the union was built, but the union was built in a way, as Lincoln said, to put slavery on the course to its ultimate extinction.”

This will shock absolutely no one, but I don’t think Tom Cotton has any idea what he’s talking about. The irony is that he is trying to take the scholarly high ground, as though his objection to The 1619 Project is that it is factually and historically flawed, when, in fact, his argument is factually and historically flawed. I’m not sure I’d call his history revisionist, as much as puzzling and uninformed.

“The Founding Fathers” is a vague term, but Cotton seems to be using it to include the authors of the Constitution. (He may be including others as well, so I’ll put “the Founders” and “Founding Fathers” in quotation marks.) The Constitution was a document of compromise agreed to by people with widely divergent views on various topics, especially slavery. Therefore, it doesn’t really make sense to attribute one view about slavery to “the Founders”: there wasn’t one.

Even the same Founder could hold different views at different times. Jefferson, at the time of the founding, was what is generally called a “restrictionist”: slavery should be restricted to the existing slave states, because such a restriction would cause it to die out (as might well have been true). By 1819, however, he was describing slavery as a “wolf by the ear” situation. In an 1820 letter, he wrote, “We have the wolf by the ear, and we can neither hold him nor safely let him go. Justice is in one scale and self-preservation in the other.” Justice demands abolition, but Jefferson (like many people) worried that freed slaves would wreak vengeance on their oppressors. By 1820, Jefferson favored the expansion of slavery (and he was still a Founder).

It’s fair to characterize Jefferson’s view (in 1820) as an instance of the “necessary evil” topos, a defense of slavery common in the early 19th century. But that wasn’t his view at the time of the founding. And it certainly isn’t accurate to say that the Founders “put the evil institution on a path to extinction”; that wasn’t what most of them were trying to do. Many of them (most?) hoped to preserve it eternally. In fact, as late as 1860, many still held that the Constitution guaranteed slavery, and that abolishing slavery would require a new Constitution. In 1854, the abolitionist William Lloyd Garrison burned a copy of the Constitution for exactly that reason, calling it “a covenant with death, and an agreement with hell.”

Cotton ignores several other important points. First, the “necessary evil” argument wasn’t very popular at the time of the Revolution or the writing of the Constitution. In that era, defenders of slavery more commonly argued that slavery brought Christianity and civilization to slaves, and was therefore a benefit. By the early 19th century, that argument had become increasingly implausible, as manumission was more and more often prohibited (for more than you probably wanted to know about the 19th-century history of arguments for slavery, see here). It’s at that point that one gets the “necessary evil” argument (which was never the only way that slavers talked about slavery). Even then, it was never an argument for abolishing slavery, let alone for slavery being “a necessary evil upon which the union was built.” I have no idea whom he thinks said that. I can’t think of anything a Founder said that could be interpreted as saying slavery was some kind of necessary phase through which the US had to pass.

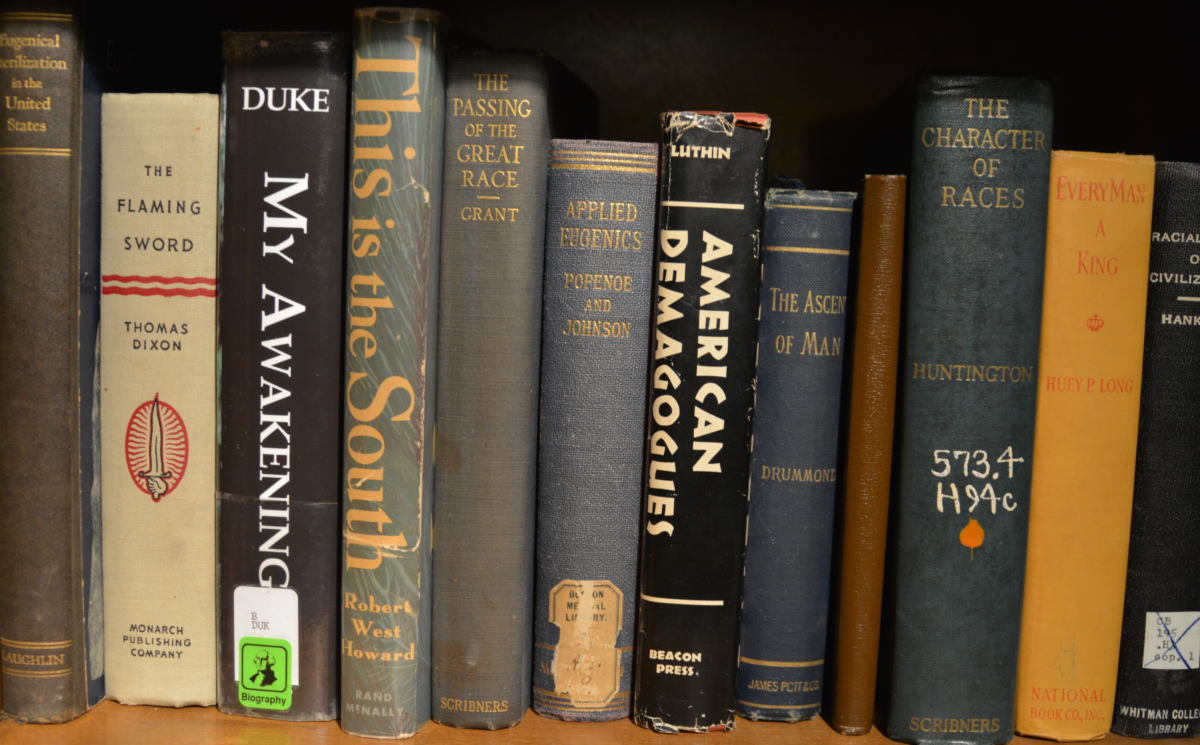

To be blunt, I think Cotton has no clue what the “necessary evil” argument actually was. A passage in Robert Walsh’s An Appeal from the Judgments of Great Britain (1819) perfectly exemplifies it:

“We do not deny, in America, that great abuses and evils accompany our negro slavery. The plurality of the leading men of the southern states, are so well aware of its pestilent genius, that they would be glad to see it abolished, if this were feasible with benefit to the slaves, and without inflicting on the country, injury of such magnitude as no community has ever voluntarily incurred. While a really practicable plan of abolition remains undiscovered, or undetermined; and while the general conduct of the Americans is such only as necessarily results from their situation, they are not to be arraigned for this institution.” (421)

This is essentially Jefferson’s argument: it’s evil, but there’s nothing we can do about it. Zephaniah Kingsley called slavery an “iniquity [that] has its origin in a great, inherent, universal and immutable law of nature” (14, A Treatise on the Patriarchal, or Co-operative System of Society As It Exists in Some Governments, and Colonies in America, and in the United States, Under the Name of Slavery, with Its Necessity and Advantages, 1829). Alexander Sims, in A View of Slavery (1834), said, “No one will deny that Slavery is a moral evil” (“Preface”). James Trecothick Austin, the Massachusetts Attorney General, wrote a response to Channing’s anti-slavery book called, appropriately enough, Remarks on Dr. Channing’s “Slavery.” Austin argued that slavery could never be abolished, and then said, “I utter the declaration with grief; but the pain of the writer does not diminish the truth of the fact” (25). The necessary evil line of defense is a self-serving fatalism about slavery. While pronouncing it evil (and thereby showing that one has the right feelings), this position precludes any action to end slavery: “Public sentiment in the slave-holding States cannot be altered” (Austin 24). What Cotton doesn’t understand is that the necessary evil argument was an argument for a fatalistic submission to the eternal presence of slavery.

Cotton doesn’t seem to be the sharpest pencil in the drawer, insofar as he seems not to understand his own argument. The necessary evil argument says slavery is evil. Cotton’s argument is that The 1619 Project is inaccurate because it presents the US as “an irredeemably corrupt, rotten and racist country,” which isn’t my read of the project at all. Oddly enough, if Cotton were right, and the “Founding Fathers” had said that slavery “was the necessary evil upon which the union was built” (as he claims), then they would have been endorsing the major point of The 1619 Project: that slavery is woven into US history from the beginning. The Founders didn’t say that, but Cotton seems to think it’s true, so I’m not even sure what his gripe with the project is. He seems to me to be endorsing its argument while thinking he’s disagreeing. (Has he actually looked at it?)

Cotton also seems not to understand Lincoln’s argument(s) on slavery. In 1858, during the Lincoln-Douglas debates, Lincoln argued that “it was the policy of the founders to prohibit the spread of slavery into the new territories of the United States,” but Lincoln wasn’t claiming that “the founders” opposed the spread of slavery into all territories. As mentioned above, the “founders” had a lot of different views; Lincoln specifically means the Northwest Ordinance of 1787, which, among other things, prohibited slavery in the Northwest Territory, north of the Ohio River. (Sometimes people cite Lincoln’s 1854 speech as though it’s about the Founders, but it isn’t: it’s about the policies regarding the expansion of slavery from 1776 to 1849. Lincoln never uses the term “Founders” in that speech because he isn’t talking about them.) In both of those speeches, Lincoln was talking about the expansion of slavery into states north of the line established by the Northwest Ordinance, in that territory. He wasn’t a fool. He knew that Kentucky (1792), Tennessee (1796), Louisiana (1812), Mississippi (1817), and various other states had been admitted as slave states by the generation that Cotton seems to want to call “Founders.”

So, here are some of the things that Cotton gets wrong. There wasn’t a single view that “the Founders” held about slavery; the Constitution didn’t put slavery on a path to extinction (and the “Founding Fathers” certainly didn’t see it that way); the “necessary evil” argument was an argument for fatalistic submission to the possibly eternal presence of slavery, not an argument for its abolition, let alone for its benefit to the country; even the necessary evil line of defense admitted that slavery was evil; I don’t know of any Founders who argued that slavery was a necessary phase for the country to go through; that certainly wasn’t Lincoln’s argument; The 1619 Project doesn’t present the US as irredeemable.

But he’s right that slavery was evil.